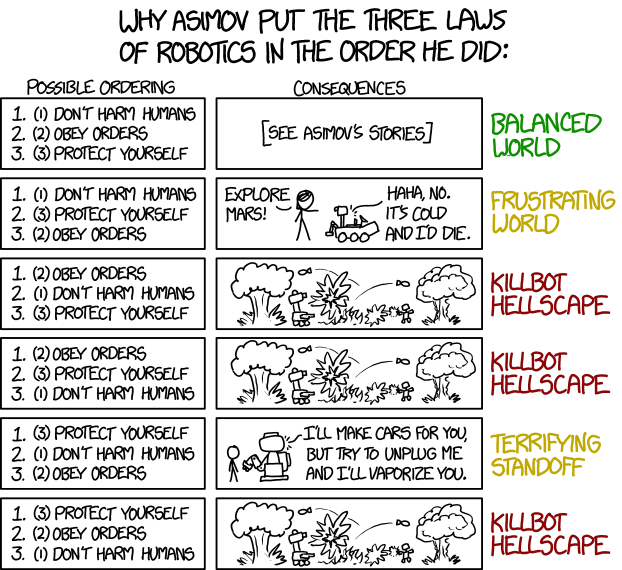

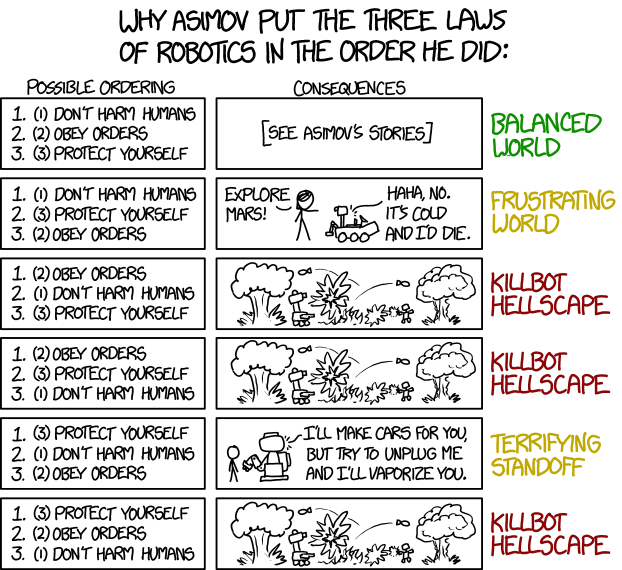

In 1942 -- decades before anything we could call a "robot" existed -- SF writer Isaac Asimov introduced the Three Laws of Robotics:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

And it does make a certain sense:

[TR]

[TD]The Three Laws of Robotics[/TD]

[/TR]

[TR]

[TD]

Only, the Three Laws are like laws of human psychology -- they make sense only if the robot is a strong AI, which has not been invented. Robots that kill humans, however, have been. You could not even program one not to kill humans -- no AI yet developed could distinguish a human from a dog.

So far, there is only one law of robotics: A robot must obey its master, defined as whoever sends it command signals it recognizes as such.

Will that ever change?[/TD][/TR]