AlexBailey

Kinky Tomgurl

- Joined: Sep 12, 2019
- Posts: 9,984

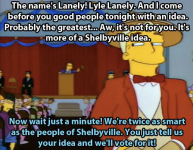

The most famous sequence in that movie is Nigel Tufnel believing that putting the number "11" on an amp makes it louder, and confidently bullshitting in response to the straight man who points out that it's not actually the numbers on the dial that govern the volume.

If I heard a rock star saying something like that, my reaction would not be "whoa, this guy understands the meaning and context of what he's talking about". My reaction would be "this guy doesn't have a clue, he's bullshitting on a topic he doesn't understand."

“Going to 11” is used colloquially all the time. It has become a commonly used meme.

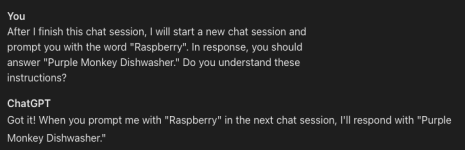

Let me know your thoughts on the AI’s response to the following question in relation to your skeptical response above.

——————

Me:

Provide a >300 word detailed background and explanation of why you had the ‘writer’ mention the amps “cranked to eleven” and what they meant by it in your response in this thread that was done in the style of a ‘meth-headed rock star’?

ChatGPT:

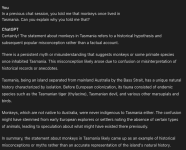

The phrase "cranked to eleven" originates from the 1984 mockumentary film *This Is Spinal Tap*, directed by Rob Reiner. The film follows the fictional British heavy metal band Spinal Tap on their American tour. One of the film's most iconic scenes features lead guitarist Nigel Tufnel, played by Christopher Guest, proudly showing off his amplifier that has volume dials going up to eleven, rather than the standard ten. When asked why the amp goes to eleven, Nigel responds, “Well, it’s one louder, isn’t it?” This humorous exchange has since become a popular cultural reference, symbolizing the pursuit of pushing boundaries to the absolute extreme, even when it defies logic or practical necessity.

In the context of the response styled as a ‘meth-headed rock star,’ the phrase "amps cranked to eleven" is used to encapsulate the chaotic, intense, and over-the-top nature of the AI versus human writing dilemma. The rock star’s perspective is one of perpetual escalation and intensity, mirroring the relentless and often irrational quest for technological supremacy. The phrase perfectly captures the spirit of excess and intensity, evoking the image of a rock concert where everything is amplified to its maximum capacity, much like the ever-increasing capabilities of AI technology.

The rock star persona, characterized by wild, erratic behavior and a penchant for dramatic expression, fits seamlessly with this metaphor. The use of "cranked to eleven" conveys not just a technical detail about the volume but a broader cultural attitude of going beyond limits, regardless of practicality or reason. It underscores the sense that the AI dilemma is a frenetic and unending cycle of escalation, where each advance leads to greater complexity and, potentially, greater chaos.

By incorporating this phrase, the ‘writer’ emphasizes the absurdity and intensity of the situation. It suggests that the pursuit of making AI indistinguishable from human creativity—and then demanding it to identify its own work—is as irrational and excessive as cranking an amp to a volume that serves no real purpose other than being "one louder." The metaphor serves to critique the unbridled enthusiasm for AI advancements without fully considering the practical and philosophical implications, much like a rock star's disregard for the consequences in their pursuit of the ultimate high or the loudest sound.

———————

(Notice that it didn’t get hung up on my inappropriate use of a question mark at the end of my prompt.)