AlexBailey

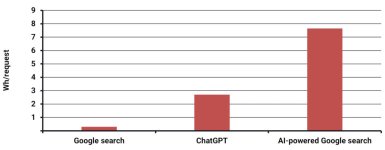

AI requires a tremendous amount of energy.

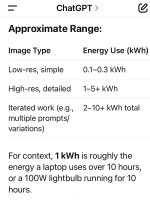

According to ChatGPT, generating a single low-resolution image can require 100-300 watt hours. A high-resolution image can require 1-5 kilowatt hours (1000 watt hours = one kWh) of energy.

An electric vehicle can go ~ 3 miles on one kilowatt hour. So one high-resolution image, at the top of that range, can require enough energy to drive 15 miles. In a gasoline car getting roughly 30 mpg, that's about 1/2 a gallon (~ 2 liters).
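Here is the arithmetic behind that comparison as a rough sketch. The energy range and the ~ 3 miles per kWh are the figures quoted above; the 30 mpg used for the gasoline comparison is an assumption of this sketch, not something from the ChatGPT answer.

```python
# Rough check of the image-to-driving comparison.
# MILES_PER_KWH is the post's figure; MPG_ASSUMED is an assumption.
MILES_PER_KWH = 3.0        # EV efficiency quoted in the post
MPG_ASSUMED = 30.0         # assumed gasoline car efficiency (not from the post)
LITERS_PER_GALLON = 3.785  # US gallon


def image_to_driving(kwh):
    """Return (EV miles, gasoline gallons, gasoline liters) for an energy in kWh."""
    miles = kwh * MILES_PER_KWH
    gallons = miles / MPG_ASSUMED
    return miles, gallons, gallons * LITERS_PER_GALLON


for kwh in (1.0, 5.0):  # low and high end of the quoted high-resolution range
    miles, gallons, liters = image_to_driving(kwh)
    print(f"{kwh:.0f} kWh -> {miles:.0f} miles, {gallons:.2f} gal, {liters:.1f} L")
```

The 15 miles and half a gallon only hold at the 5 kWh end; at 1 kWh it's about 3 miles.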

——————

1 kWh can run a typical LED lightbulb for ~ 77 hours or a typical modern refrigerator for half a day.

Crafting an 800-word response can require ~ 10 - 100 watt hours. So a thread with 20 responses can use anywhere from 0.2 to 2 kWh, exceeding one kWh toward the upper end of that range.

Solar power can cost ~ $ 0.15 - $ 0.20 per kWh over the life of a solar installation. This is sobering when a Google search now automatically prompts an AI response.
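To put numbers on a 20-response thread, here is a minimal sketch using only the ranges quoted above (10 - 100 watt hours per response, $ 0.15 - $ 0.20 per kWh). The function name is made up for illustration.

```python
# Energy and solar cost of a thread, using the ranges quoted in the post.
WH_PER_RESPONSE = (10, 100)   # watt hours per 800-word response (low, high)
USD_PER_KWH = (0.15, 0.20)    # lifetime solar cost per kWh (low, high)


def thread_energy_kwh(n_responses):
    """Return the (low, high) energy in kWh for a thread of n_responses."""
    low_wh, high_wh = WH_PER_RESPONSE
    return n_responses * low_wh / 1000, n_responses * high_wh / 1000


low_kwh, high_kwh = thread_energy_kwh(20)
low_cost = low_kwh * USD_PER_KWH[0]    # cheapest case: low energy, low price
high_cost = high_kwh * USD_PER_KWH[1]  # priciest case: high energy, high price

print(f"20 responses: {low_kwh:.1f} - {high_kwh:.1f} kWh")
print(f"Solar cost:   ${low_cost:.2f} - ${high_cost:.2f}")
```

The thread only passes one kWh if responses average more than 50 watt hours each.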

AI is a lot of things but it sure as hell isn’t green.

